# On the logic of "it" - Sparse (hopefully-)not-so-metaphysical thoughts

The idea of a Characteristica Universalis, i.e. an encyclopedic compendium of *acquired knowledge* expressed in a universal formal *language* potentially able to express all *understanding*, has in our times turned into the inverse idea: that *language* encapsulates all there is to *understanding* (whence, e.g., the idea/hope that so-called Large Language Models are a "solution to problems").

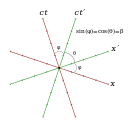

But even the simplest formalization exercise shows that between *language* and *understanding* (or between language and "reality") there is an essential gap that only *imagination* can fill.

What does this expression *mean*: `fun x y => x + y` ?

What does this expression *mean*: `a^$2 <>= T` ?

What does this expression *mean*: `2` ?

Sure, we might *explain* each of those, but as long as the explanation is itself formal, we still need to find a bottom to it (what does "it" *mean*?). Ultimately, the problem of meaning is pushed down to the level of our formal *primitives*.

And that is properly our *symbolic* level, the one that simply "can-/will *not* be explained", except in the sense that its own shape is its own *diagram* (or that "(its) nature is its own explanation"). Ultimately, the idea/hope is that we cannot explain it, but we can understand it to the point of *naming* it.
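To make the regress concrete, here is a minimal Lean 4 sketch. Lean is an assumption on our part, chosen only because `fun x y => x + y` above reads as Lean-style syntax, and `MyNat`, `two`, `MyNat.add` and `plus` are hypothetical names in the spirit of Lean's built-in `Nat`. It "explains" `2` and `+`, and the explanation stops at the constructors `zero` and `succ`, which are declared rather than defined: we can only name them.

```lean
-- Hypothetical re-definition of the natural numbers (named MyNat to avoid
-- clashing with Lean's built-in Nat). The constructors are the primitives:
-- nothing inside the system explains them further.
inductive MyNat where
  | zero : MyNat
  | succ : MyNat → MyNat

-- "2" explained in terms of the primitives: the successor of the successor of zero
def two : MyNat := MyNat.succ (MyNat.succ MyNat.zero)

-- "+" explained by structural recursion on the second argument
def MyNat.add : MyNat → MyNat → MyNat
  | n, MyNat.zero   => n
  | n, MyNat.succ m => MyNat.succ (MyNat.add n m)

-- `fun x y => x + y`, with `+` unfolded to the definition above
def plus : MyNat → MyNat → MyNat := fun x y => MyNat.add x y

-- A sanity check that holds by definitional unfolding alone
example : plus two MyNat.zero = two := rfl
```

Asking what `zero` or `succ` *mean* gets no further answer from the system itself: that is the "bottom" where formal explanation ends and naming begins.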

Indeed, one guiding principle is "let code speak for itself": our formal language should be as readable as a natural language, just without the ambiguities, while the formal aspect "just" ensures that *code* cannot lie about *itself* (about its assumptions as well as its derivations).

So, I think Leibniz's formal-encyclopedic project is by now doable, with applications potentially extending even to the legal context. On the other hand, and precisely for reasons of concreteness and applicability, I do *not* think *we* can get away without the umbrella of a (trustless, hopefully) *contract*, where "it" takes the form of a (collaborative, hopefully) *game*-theoretic protocol for *the acquisition, revision, consolidation and exercise of mutually informed and formally verified knowledge*.